This post is about automatically generating sitemaps. I chose this topic because it is fresh in my mind: I recently started using sitemaps for pickat.sg. After some research I concluded that this would be a good thing. At the time of posting, Google had 3,171 URLs indexed for the website (it has been live for 3 months now), whereas after generating the sitemaps, 87,818 URLs were submitted. I am curious how many will get indexed after that…

Since I didn’t want to add over 80k URLs by hand, I had to come up with an automated solution. Because the Pickat mobile app was developed with Java Spring, sitemapgen4j was an easy choice.

Since this post draws on articles by others, you may see different approaches in places; please focus on the logic.

Maven dependency

Check out the latest version here:

```xml
<dependency>
    <groupId>com.google.code</groupId>
    <artifactId>sitemapgen4j</artifactId>
    <version>1.0.1</version>
</dependency>
```

The podcasts from pickat.sg each have an associated update frequency (DAILY, WEEKLY, MONTHLY, TERMINATED, UNKNOWN), so it made sense to organize them into sub-sitemaps, one per frequency, and set the lastMod and changeFreq properties accordingly. This way you can modify the lastMod of the daily sitemap in the sitemap index without modifying the lastMod of the monthly sitemap, and the Google bot doesn't need to check the monthly sitemap every day.
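As a rough, library-free illustration of this layout, the sketch below groups podcast URLs by update frequency and derives one file-name prefix per group, following the "sitemap_" + frequency naming used in the method below. The Frequency enum and PodcastRef class are hypothetical stand-ins for the post's UpdateFrequencyType and Podcast types, which are not shown.

```java
import java.util.ArrayList;
import java.util.EnumMap;
import java.util.List;
import java.util.Map;

// Hypothetical stand-in for the post's UpdateFrequencyType (not shown in the post).
enum Frequency { DAILY, WEEKLY, MONTHLY, TERMINATED, UNKNOWN }

// Hypothetical minimal podcast: its page URL and how often it is updated.
final class PodcastRef {
    final String url;
    final Frequency frequency;

    PodcastRef(String url, Frequency frequency) {
        this.url = url;
        this.frequency = frequency;
    }
}

final class FrequencyPartitioner {
    private FrequencyPartitioner() {}

    // Groups podcast URLs by update frequency. Each group would become its own
    // sub-sitemap, so regenerating the DAILY file leaves the MONTHLY file untouched.
    static Map<Frequency, List<String>> partition(List<PodcastRef> podcasts) {
        Map<Frequency, List<String>> groups = new EnumMap<>(Frequency.class);
        for (PodcastRef podcast : podcasts) {
            groups.computeIfAbsent(podcast.frequency, f -> new ArrayList<>()).add(podcast.url);
        }
        return groups;
    }

    // File-name prefix per group, following the "sitemap_" + frequency convention.
    static String fileNamePrefix(Frequency frequency) {
        return "sitemap_" + frequency.name();
    }
}
```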

Generation of sitemap

Method: createSitemapForPodcastsWithFrequency – generates one sitemap file

```java
/**
 * Creates a sitemap for podcasts/episodes with the given update frequency
 *
 * @param updateFrequency       update frequency of the podcasts
 * @param sitemapsDirectoryPath the location where the sitemap will be generated
 */
public void createSitemapForPodcastsWithFrequency(
        UpdateFrequencyType updateFrequency, String sitemapsDirectoryPath) throws MalformedURLException {

    // number of URLs counted
    int nrOfURLs = 0;

    File targetDirectory = new File(sitemapsDirectoryPath);
    WebSitemapGenerator wsg = WebSitemapGenerator.builder("http://www.podcastpedia.org", targetDirectory)
            .fileNamePrefix("sitemap_" + updateFrequency.toString()) // name of the generated sitemap
            .gzip(true) // recommended, as it decreases the file's size significantly
            .build();

    // reads reachable podcasts (with their episodes) from the database
    List<Podcast> podcasts = readDao.getPodcastsAndEpisodeWithUpdateFrequency(updateFrequency);
    for (Podcast podcast : podcasts) {
        String url = "http://www.podcastpedia.org" + "/podcasts/" + podcast.getPodcastId()
                + "/" + podcast.getTitleInUrl();
        WebSitemapUrl wsmUrl = new WebSitemapUrl.Options(url)
                .lastMod(podcast.getPublicationDate()) // date of the last published episode
                .priority(0.9) // high, just below the start page (default priority 1.0)
                .changeFreq(changeFrequencyFromUpdateFrequency(updateFrequency))
                .build();
        wsg.addUrl(wsmUrl);
        nrOfURLs++;

        for (Episode episode : podcast.getEpisodes()) {
            url = "http://www.podcastpedia.org" + "/podcasts/" + podcast.getPodcastId()
                    + "/" + podcast.getTitleInUrl()
                    + "/episodes/" + episode.getEpisodeId() + "/" + episode.getTitleInUrl();

            // build the sitemap URL for the episode
            wsmUrl = new WebSitemapUrl.Options(url)
                    .lastMod(episode.getPublicationDate()) // publication date of the episode
                    .priority(0.8) // high, but lower than the podcast's priority
                    // episodes are treated like terminated podcasts
                    .changeFreq(changeFrequencyFromUpdateFrequency(UpdateFrequencyType.TERMINATED))
                    .build();
            wsg.addUrl(wsmUrl);
            nrOfURLs++;
        }
    }

    // One sitemap can contain a maximum of 50,000 URLs.
    if (nrOfURLs <= 50000) {
        wsg.write();
    } else {
        // Multiple files are created, plus a sitemap_index.xml describing them, which is ignored
        // (createSitemapIndexFile skips it). Workaround for the issue described at
        // http://code.google.com/p/sitemapgen4j/issues/attachmentText?id=8&aid=80003000&name=Admit_Single_Sitemap_in_Index.patch&token=p2CFJZ5OOE5utzZV1UuxnVzFJmE%3A1375266156989
        wsg.write();
        wsg.writeSitemapsWithIndex();
    }
}
```

The generated file contains URLs to podcasts and episodes, with changeFreq and lastMod set accordingly.
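The nrOfURLs <= 50000 check in the method above exists because a single sitemap file may hold at most 50,000 URLs. As a rough sketch in plain Java (no sitemapgen4j involved), the number of files needed and whether an index is required can be computed as follows; the SitemapSplit class is hypothetical.

```java
final class SitemapSplit {
    // The sitemap protocol allows at most 50,000 URLs per sitemap file.
    static final int MAX_URLS_PER_SITEMAP = 50_000;

    private SitemapSplit() {}

    // Number of sitemap files needed for urlCount URLs (ceiling division,
    // done in long arithmetic so large counts cannot overflow).
    static int fileCount(int urlCount) {
        if (urlCount < 0) {
            throw new IllegalArgumentException("urlCount must not be negative");
        }
        return (int) ((urlCount + (long) MAX_URLS_PER_SITEMAP - 1) / MAX_URLS_PER_SITEMAP);
    }

    // Same condition as the method above: only more than 50,000 URLs need an index.
    static boolean needsIndex(int urlCount) {
        return urlCount > MAX_URLS_PER_SITEMAP;
    }
}
```

For instance, if all 87,818 URLs mentioned at the start went through a single generator, fileCount would return 2 and the index branch would be taken.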

Snippet from the generated sitemap_MONTHLY.xml:

```xml
<?xml version="1.0" encoding="UTF-8"?>
<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url>
    <lastmod>2013-07-05T17:01+02:00</lastmod>
    <changefreq>monthly</changefreq>
    <priority>0.9</priority>
  </url>
  <url>
    <lastmod>2013-07-05T17:01+02:00</lastmod>
    <changefreq>never</changefreq>
    <priority>0.8</priority>
  </url>
  <url>
    <lastmod>2013-03-11T15:40+01:00</lastmod>
    <changefreq>never</changefreq>
    <priority>0.8</priority>
  </url>
  .....
</urlset>
```
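The changefreq values above come from changeFrequencyFromUpdateFrequency, which the method calls but the post does not show. A minimal sketch of such a mapping, assuming it returns the protocol's lowercase changefreq strings (sitemapgen4j's WebSitemapUrl.Options.changeFreq actually takes its ChangeFreq enum): MONTHLY to "monthly" and TERMINATED to "never" match the snippet, while treating UNKNOWN as "omit the hint" is a hypothetical choice. UpdateFrequency is a stand-in for the post's UpdateFrequencyType.

```java
// Hypothetical stand-in for the post's UpdateFrequencyType.
enum UpdateFrequency { DAILY, WEEKLY, MONTHLY, TERMINATED, UNKNOWN }

final class ChangeFreqMapper {
    private ChangeFreqMapper() {}

    // Maps a podcast's update frequency to a sitemap <changefreq> value.
    // Returns null for UNKNOWN, meaning the optional <changefreq> element is omitted.
    static String changeFrequencyFromUpdateFrequency(UpdateFrequency frequency) {
        switch (frequency) {
            case DAILY:      return "daily";
            case WEEKLY:     return "weekly";
            case MONTHLY:    return "monthly";
            case TERMINATED: return "never";  // finished podcasts and published episodes
            default:         return null;     // UNKNOWN: no reliable hint
        }
    }
}
```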

Generation of sitemap index

After sitemaps are generated for all update frequencies, a sitemap index is generated to list all the sitemaps. This is the file that gets submitted in Google Webmaster Tools.

Method: createSitemapIndexFile

```java
/**
 * Creates a sitemap index from all the files in the specified directory,
 * excluding test files and sitemap_index.xml files
 *
 * @param sitemapsDirectoryPath the location where the sitemap index will be generated
 */
public void createSitemapIndexFile(String sitemapsDirectoryPath) throws MalformedURLException {
    File targetDirectory = new File(sitemapsDirectoryPath);
    File outFile = new File(sitemapsDirectoryPath + "/sitemap_index.xml");
    SitemapIndexGenerator sig = new SitemapIndexGenerator("http://www.podcastpedia.org", outFile);

    // get all the files from the specified directory
    File[] files = targetDirectory.listFiles();
    if (files == null) {
        throw new IllegalArgumentException("Not a readable directory: " + sitemapsDirectoryPath);
    }
    for (File file : files) {
        // skip the index itself and test files
        boolean isSitemapFile = !file.getName().startsWith("sitemap_index")
                && !file.getName().startsWith("test");
        if (isSitemapFile) {
            SitemapIndexUrl sitemapIndexUrl = new SitemapIndexUrl(
                    "http://www.podcastpedia.org/" + file.getName(), new Date(file.lastModified()));
            sig.addUrl(sitemapIndexUrl);
        }
    }
    sig.write();
}
```

The process is quite simple: the method looks in the folder where the sitemap files were created and generates a sitemap index from them, setting each entry's lastmod to the time the file was last modified (new Date(file.lastModified())). The two exclusion checks are combined with &&, so a file is listed only if its name starts with neither "sitemap_index" nor "test".

Et voilà sitemap_index.xml:

```xml
<?xml version="1.0" encoding="UTF-8"?>
<sitemapindex xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <sitemap>
    <lastmod>2013-08-01T07:24:38.450+02:00</lastmod>
  </sitemap>
  <sitemap>
    <lastmod>2013-08-01T07:25:01.347+02:00</lastmod>
  </sitemap>
  <sitemap>
    <lastmod>2013-08-01T07:25:10.392+02:00</lastmod>
  </sitemap>
  <sitemap>
    <lastmod>2013-08-01T07:26:33.067+02:00</lastmod>
  </sitemap>
  <sitemap>
    <lastmod>2013-08-01T07:24:53.957+02:00</lastmod>
  </sitemap>
</sitemapindex>
```
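The filtering and the index layout can also be sketched without sitemapgen4j. The hypothetical class below keeps only files whose names start with neither "sitemap_index" nor "test" and renders a loc and lastmod entry for each; the base URL is a parameter, and formatting lastmod in UTC via java.time is an assumption (the index above was written with a +02:00 offset).

```java
import java.time.Instant;
import java.time.ZoneOffset;
import java.time.format.DateTimeFormatter;
import java.util.Map;

final class SitemapIndexSketch {
    private SitemapIndexSketch() {}

    // A file belongs in the index only if it is neither the index itself nor a test file.
    static boolean isSitemapFile(String name) {
        return !name.startsWith("sitemap_index") && !name.startsWith("test");
    }

    // Renders a sitemap index for a (file name -> last-modified time) map.
    // File names are assumed to be plain ASCII that needs no XML escaping.
    static String render(String baseUrl, Map<String, Instant> files) {
        StringBuilder xml = new StringBuilder()
                .append("<?xml version=\"1.0\" encoding=\"UTF-8\"?>\n")
                .append("<sitemapindex xmlns=\"http://www.sitemaps.org/schemas/sitemap/0.9\">\n");
        for (Map.Entry<String, Instant> entry : files.entrySet()) {
            if (!isSitemapFile(entry.getKey())) {
                continue; // skip sitemap_index* and test* files
            }
            // W3C datetime, e.g. 2013-08-01T05:24:38Z
            String lastMod = DateTimeFormatter.ISO_OFFSET_DATE_TIME
                    .format(entry.getValue().atOffset(ZoneOffset.UTC));
            xml.append("  <sitemap>\n")
               .append("    <loc>").append(baseUrl).append('/').append(entry.getKey()).append("</loc>\n")
               .append("    <lastmod>").append(lastMod).append("</lastmod>\n")
               .append("  </sitemap>\n");
        }
        return xml.append("</sitemapindex>\n").toString();
    }
}
```

With || instead of &&, isSitemapFile would return true for every name, since no file name starts with both prefixes.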


Source code

- SitemapService.zip – the archive contains the interface and class implementation for the methods described in the post

Batch Job Approach

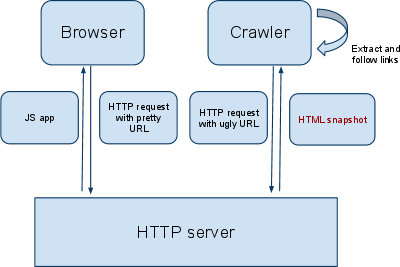

Eventually, I ended up doing something similar to the first suggestion. However, instead of generating the sitemap every time its URL is accessed, I generate it from a batch job.

With this approach, I get to schedule how often the sitemap is generated. And because generation happens outside of an HTTP request, I can afford a longer time for it to complete.

Since I had previous experience with it, Spring Batch was my obvious choice. It is a framework for building batch jobs in Java, and it works with the idea of “chunk processing”, wherein huge sets of data are divided and processed in chunks.

I then searched for a Java library for writing sitemaps and came up with SitemapGen4j. It provides an easy-to-use API and is released under the Apache License 2.0.

Requirements

My requirements are simple: I have a couple of static web pages which can be hard-coded to the sitemap. I also have pages for each place submitted to the web site; each place is stored as a single row in the database and is identified by a unique ID. There are also pages for each registered user; similar to the places, each user is stored as a single row and is identified by a unique ID.

A job in Spring Batch is composed of one or more “steps”. A step encapsulates the processing to be executed against a set of data.

I identified 4 steps for my job:

- Add static pages to the sitemap

- Add place pages to the sitemap

- Add profile pages to the sitemap

- Write the sitemap XML to a file
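Stripped of Spring Batch, the job is an ordered series of steps that all append to one shared URL collector, with the last step producing the result. The sketch below is a framework-free illustration of that flow, not the actual job: SitemapJobSketch is hypothetical, place and profile data are passed in as plain lists, place URLs omit the searchId query string for brevity, and the final "write" step simply returns the collected URLs instead of writing XML.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Collections;
import java.util.List;

final class SitemapJobSketch {
    private SitemapJobSketch() {}

    // Runs the four steps in order against one shared URL list,
    // much like the single WebSitemapGenerator shared by the real steps.
    static List<String> run(String rootUrl, List<String> placeIds, List<String> usernames) {
        List<String> urls = new ArrayList<>();

        // Step 1: hard-coded static pages.
        for (String page : Arrays.asList("", "/terms", "/privacy", "/attribution")) {
            urls.add(rootUrl + page);
        }
        // Step 2: one URL per place row (query string omitted for brevity).
        for (String id : placeIds) {
            urls.add(rootUrl + "/place/" + id);
        }
        // Step 3: one URL per user profile.
        for (String username : usernames) {
            urls.add(rootUrl + "/profile/" + username);
        }
        // Step 4: "write" the sitemap; here we just return a read-only view.
        return Collections.unmodifiableList(urls);
    }
}
```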

Step 1

Because it does not involve processing a set of data, my first step can be implemented directly as a simple Tasklet:

```java
import com.redfin.sitemapgenerator.WebSitemapGenerator;
import javax.inject.Inject;
import org.slf4j.Logger;
import org.slf4j.LoggerFactory;
import org.springframework.batch.core.StepContribution;
import org.springframework.batch.core.scope.context.ChunkContext;
import org.springframework.batch.core.step.tasklet.Tasklet;
import org.springframework.batch.repeat.RepeatStatus;

public class StaticPagesInitializerTasklet implements Tasklet {

    private static final Logger logger = LoggerFactory.getLogger(StaticPagesInitializerTasklet.class);

    private final String rootUrl;

    @Inject
    private WebSitemapGenerator sitemapGenerator;

    public StaticPagesInitializerTasklet(String rootUrl) {
        this.rootUrl = rootUrl;
    }

    @Override
    public RepeatStatus execute(StepContribution contribution, ChunkContext chunkContext) throws Exception {
        logger.info("Adding URL for static pages...");
        sitemapGenerator.addUrl(rootUrl);
        sitemapGenerator.addUrl(rootUrl + "/terms");
        sitemapGenerator.addUrl(rootUrl + "/privacy");
        sitemapGenerator.addUrl(rootUrl + "/attribution");
        logger.info("Done.");
        return RepeatStatus.FINISHED;
    }

    public void setSitemapGenerator(WebSitemapGenerator sitemapGenerator) {
        this.sitemapGenerator = sitemapGenerator;
    }
}
```

The starting point of a Tasklet is the execute() method. Here, I add the URLs of the known static pages of CheckTheCrowd.com.

Step 2

The second step requires places data to be read from the database then subsequently written to the sitemap.

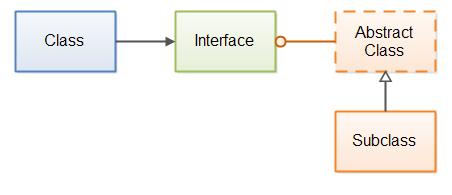

This is a common requirement, and Spring Batch provides built-in interfaces to help perform this type of processing:

- ItemReader – Reads data from a source one item at a time. In my case, an item represents a place.

- ItemProcessor – Transforms the data before writing. This is optional and is not used in this example.

- ItemWriter – Writes a chunk of data to a destination. In my case, I add each place to the sitemap.

The Spring Batch API includes a class called JdbcCursorItemReader, an implementation of ItemReader which continuously reads rows from a JDBC ResultSet. It requires a RowMapper, which is responsible for mapping database rows to batch items.

For this step, I declare a JdbcCursorItemReader in my Spring configuration and set my implementation of RowMapper:

```java
@Bean
public JdbcCursorItemReader<PlaceItem> placeItemReader() {
    JdbcCursorItemReader<PlaceItem> itemReader = new JdbcCursorItemReader<>();
    itemReader.setSql(environment.getRequiredProperty(PROP_NAME_SQL_PLACES));
    itemReader.setDataSource(dataSource);
    itemReader.setRowMapper(new PlaceItemRowMapper());
    return itemReader;
}
```

setSql() sets the SQL query whose ResultSet the reader iterates over. In my case, the SQL statement is fetched from a properties file.

setDataSource() sets the JDBC DataSource.

setRowMapper() sets my implementation of RowMapper.

Next, I write my implementation of ItemWriter:

```java
import com.redfin.sitemapgenerator.WebSitemapGenerator;
import java.util.List;
import javax.inject.Inject;
import org.slf4j.Logger;
import org.slf4j.LoggerFactory;
import org.springframework.batch.item.ItemWriter;

public class PlaceItemWriter implements ItemWriter<PlaceItem> {

    private static final Logger logger = LoggerFactory.getLogger(PlaceItemWriter.class);

    private final String rootUrl;

    @Inject
    private WebSitemapGenerator sitemapGenerator;

    public PlaceItemWriter(String rootUrl) {
        this.rootUrl = rootUrl;
    }

    @Override
    public void write(List<? extends PlaceItem> items) throws Exception {
        for (PlaceItem place : items) {
            // pattern: {rootUrl}/place/{placeId}?searchId={searchId}
            String url = rootUrl + "/place/" + place.getApiId() + "?searchId=" + place.getSearchId();
            logger.info("Adding URL: {}", url);
            sitemapGenerator.addUrl(url);
        }
    }

    public void setSitemapGenerator(WebSitemapGenerator sitemapGenerator) {
        this.sitemapGenerator = sitemapGenerator;
    }
}
```

Places in CheckTheCrowd.com are accessible from URLs with this pattern: checkthecrowd.com/place/{placeId}?searchId={searchId}. My ItemWriter simply iterates through the chunk of PlaceItems, builds each URL, then adds it to the sitemap.
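PlaceItemWriter appends searchId to the query string unchanged. If a search ID could ever contain spaces, & or other reserved characters, encoding it first is safer. A minimal sketch, assuming the same /place/{placeId}?searchId={searchId} pattern and that place IDs are already URL-safe (the PlaceUrls helper is hypothetical):

```java
import java.io.UnsupportedEncodingException;
import java.net.URLEncoder;

final class PlaceUrls {
    private PlaceUrls() {}

    // Builds {rootUrl}/place/{placeId}?searchId={searchId}, form-encoding the
    // query value (space becomes "+", "&" becomes "%26"). placeId is assumed URL-safe.
    static String placeUrl(String rootUrl, String placeId, String searchId) {
        try {
            return rootUrl + "/place/" + placeId + "?searchId=" + URLEncoder.encode(searchId, "UTF-8");
        } catch (UnsupportedEncodingException e) {
            // UTF-8 is guaranteed to be supported by every JVM.
            throw new IllegalStateException(e);
        }
    }
}
```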

Step 3

The third step is exactly the same as the previous one, but this time the processing is done on user profiles.

Below is my ItemReader declaration:

```java
@Bean
public JdbcCursorItemReader<ProfileItem> profileItemReader() {
    JdbcCursorItemReader<ProfileItem> itemReader = new JdbcCursorItemReader<>();
    itemReader.setSql(environment.getRequiredProperty(PROP_NAME_SQL_PROFILES));
    itemReader.setDataSource(dataSource);
    itemReader.setRowMapper(new ProfileItemRowMapper());
    return itemReader;
}
```

Below is my ItemWriter implementation:

```java
import com.redfin.sitemapgenerator.WebSitemapGenerator;
import java.util.List;
import javax.inject.Inject;
import org.slf4j.Logger;
import org.slf4j.LoggerFactory;
import org.springframework.batch.item.ItemWriter;

public class ProfileItemWriter implements ItemWriter<ProfileItem> {

    private static final Logger logger = LoggerFactory.getLogger(ProfileItemWriter.class);

    private final String rootUrl;

    @Inject
    private WebSitemapGenerator sitemapGenerator;

    public ProfileItemWriter(String rootUrl) {
        this.rootUrl = rootUrl;
    }

    @Override
    public void write(List<? extends ProfileItem> items) throws Exception {
        for (ProfileItem profile : items) {
            // pattern: {rootUrl}/profile/{username}
            String url = rootUrl + "/profile/" + profile.getUsername();
            logger.info("Adding URL: {}", url);
            sitemapGenerator.addUrl(url);
        }
    }

    public void setSitemapGenerator(WebSitemapGenerator sitemapGenerator) {
        this.sitemapGenerator = sitemapGenerator;
    }
}
```

Profiles in CheckTheCrowd.com are accessed from URLs with this pattern: checkthecrowd.com/profile/{username}.

Step 4

The last step is fairly straightforward and is also implemented as a simple Tasklet:

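```java
import com.redfin.sitemapgenerator.WebSitemapGenerator;
import javax.inject.Inject;
import org.slf4j.Logger;
import org.slf4j.LoggerFactory;
import org.springframework.batch.core.StepContribution;
import org.springframework.batch.core.scope.context.ChunkContext;
import org.springframework.batch.core.step.tasklet.Tasklet;
import org.springframework.batch.repeat.RepeatStatus;

public class XmlWriterTasklet implements Tasklet {

    private static final Logger logger = LoggerFactory.getLogger(XmlWriterTasklet.class);

    @Inject
    private WebSitemapGenerator sitemapGenerator;

    @Override
    public RepeatStatus execute(StepContribution contribution, ChunkContext chunkContext) throws Exception {
        logger.info("Writing sitemap.xml...");
        sitemapGenerator.write();
        logger.info("Done.");
        return RepeatStatus.FINISHED;
    }
}
```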

Notice that I am using the same instance of WebSitemapGenerator across all the steps. It is declared in my Spring configuration as:

```java
@Bean
public WebSitemapGenerator sitemapGenerator() throws Exception {
    String rootUrl = environment.getRequiredProperty(PROP_NAME_ROOT_URL);
    String deployDirectory = environment.getRequiredProperty(PROP_NAME_DEPLOY_PATH);
    return WebSitemapGenerator.builder(rootUrl, new File(deployDirectory))
            .allowMultipleSitemaps(true)
            .maxUrls(1000)
            .build();
}
```

Because they change between environments (dev vs. prod), rootUrl and deployDirectory are both configured from a properties file.
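The bean reads both values with environment.getRequiredProperty(...), which fails fast when a key cannot be resolved. The same fail-fast idea can be sketched with plain java.util.Properties, for example to sanity-check a dev or prod file; the keys sitemap.rootUrl and sitemap.deployPath are hypothetical, since the post does not show the values behind PROP_NAME_ROOT_URL and PROP_NAME_DEPLOY_PATH.

```java
import java.io.IOException;
import java.io.StringReader;
import java.io.UncheckedIOException;
import java.util.Properties;

final class SitemapSettings {
    // Hypothetical keys; the real names live behind the PROP_NAME_* constants.
    static final String ROOT_URL_KEY = "sitemap.rootUrl";
    static final String DEPLOY_PATH_KEY = "sitemap.deployPath";

    final String rootUrl;
    final String deployPath;

    private SitemapSettings(String rootUrl, String deployPath) {
        this.rootUrl = rootUrl;
        this.deployPath = deployPath;
    }

    // Parses .properties text and fails fast on a missing key,
    // similar in spirit to getRequiredProperty().
    static SitemapSettings load(String propertiesText) {
        Properties props = new Properties();
        try {
            props.load(new StringReader(propertiesText));
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return new SitemapSettings(required(props, ROOT_URL_KEY), required(props, DEPLOY_PATH_KEY));
    }

    private static String required(Properties props, String key) {
        String value = props.getProperty(key);
        if (value == null || value.trim().isEmpty()) {
            throw new IllegalStateException("Required key '" + key + "' not found");
        }
        return value.trim();
    }
}
```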

Wiring them all together…

```xml
<beans xmlns="http://www.springframework.org/schema/beans"
       xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
       xmlns:context="http://www.springframework.org/schema/context"
       xmlns:batch="http://www.springframework.org/schema/batch"
       xsi:schemaLocation="
           http://www.springframework.org/schema/beans http://www.springframework.org/schema/beans/spring-beans.xsd
           http://www.springframework.org/schema/context http://www.springframework.org/schema/context/spring-context.xsd
           http://www.springframework.org/schema/batch http://www.springframework.org/schema/batch/spring-batch.xsd">

    <context:component-scan base-package="com.checkthecrowd.batch.sitemapgen.config" />

    <bean class="...config.SitemapGenConfig" />
    <bean class="...config.java.process.ConfigurationPostProcessor" />

    <batch:job id="generateSitemap" job-repository="jobRepository">
        <batch:step id="insertStaticPages" next="insertPlacePages">
            <batch:tasklet ref="staticPagesInitializerTasklet" />
        </batch:step>
        <batch:step id="insertPlacePages" parent="abstractParentStep" next="insertProfilePages">
            <batch:tasklet>
                <batch:chunk reader="placeItemReader" writer="placeItemWriter" />
            </batch:tasklet>
        </batch:step>
        <batch:step id="insertProfilePages" parent="abstractParentStep" next="writeXml">
            <batch:tasklet>
                <batch:chunk reader="profileItemReader" writer="profileItemWriter" />
            </batch:tasklet>
        </batch:step>
        <batch:step id="writeXml">
            <batch:tasklet ref="xmlWriterTasklet" />
        </batch:step>
    </batch:job>

    <batch:step id="abstractParentStep" abstract="true">
        <batch:tasklet>
            <batch:chunk commit-interval="100" />
        </batch:tasklet>
    </batch:step>
</beans>
```

The abstractParentStep declaration at the end is an abstract step which serves as the common parent for steps 2 and 3. It sets a property called commit-interval, which defines how many items make up a chunk. In this case, a chunk consists of 100 items.
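To make the role of commit-interval concrete, here is a framework-free sketch of chunk-oriented processing: items are pulled from a reader one at a time until it returns null (the ItemReader end-of-input convention), buffered, and handed to the writer every commitInterval items, with a final partial chunk at the end. ChunkLoop and its Supplier/Consumer parameters are hypothetical; real Spring Batch also wraps each chunk in a transaction, which is omitted here.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Consumer;
import java.util.function.Supplier;

final class ChunkLoop {
    private ChunkLoop() {}

    // Reads items until the reader returns null, passing them to the writer in
    // chunks of at most commitInterval items. Returns the number of chunks written.
    static <T> int process(Supplier<T> reader, Consumer<List<T>> writer, int commitInterval) {
        if (commitInterval < 1) {
            throw new IllegalArgumentException("commitInterval must be >= 1");
        }
        int chunks = 0;
        List<T> chunk = new ArrayList<>(commitInterval);
        T item;
        while ((item = reader.get()) != null) {
            chunk.add(item);
            if (chunk.size() == commitInterval) {   // chunk full: hand it to the writer
                writer.accept(chunk);
                chunks++;
                chunk = new ArrayList<>(commitInterval);
            }
        }
        if (!chunk.isEmpty()) {                     // final, partially filled chunk
            writer.accept(chunk);
            chunks++;
        }
        return chunks;
    }
}
```

With 250 places and commit-interval="100", for example, the writer would be called three times, with 100, 100 and 50 items.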

There is a lot more to Spring Batch; for details, refer to the official reference guide.